.NET JIT and CLR - Joined at the Hip

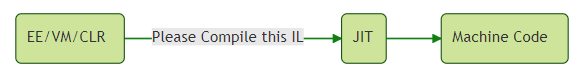

05 Jul 2018 - 2019 wordsI’ve been digging into .NET Internals for a while now, but never really looked closely at how the ‘Just-in-Time’ (JIT) compiler works. In my mind, the interaction between the .NET Runtime and the JIT has always looked like this:

Nice and straightforward: the CLR asks the JIT to compile some ‘Intermediate Language’ (IL) code into machine code, and the JIT hands back the bytes when it’s done.

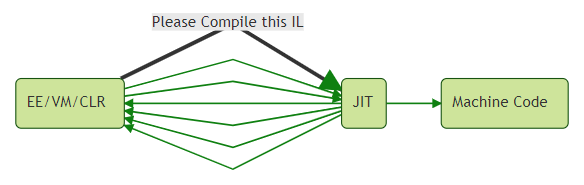

However, it turns out the interaction is much more complicated, in reality it looks more like this:

The JIT and the CLR’s ‘Execution Engine’ (EE) or ‘Virtual Machine’ (VM) work closely with one another; they really are ‘joined at the hip’.

The rest of this post will explore the interaction between the 2 components, how they work together and why they need to.

The JIT Compiler

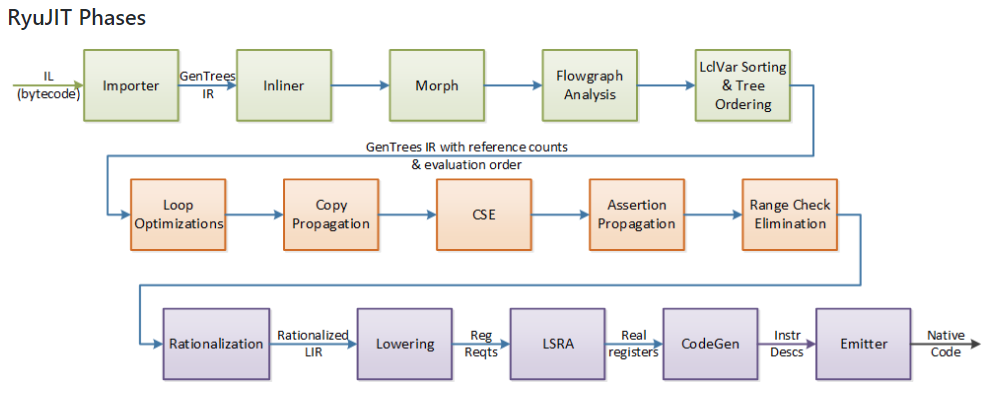

As a quick aside, this post will not be talking about the internals of the JIT compiler itself, if you want to find out more about how that works I recommend reading the fantastic overview in the BOTR and this excellent tutorial, where this very helpful diagram comes from:

After all that, if you still want more, you can take a look at the ‘JIT’ section in the ‘Hitchhikers-Guide-to-the-CoreCLR-Source-Code’.

Components within the CLR

Before we go any further it’s helpful to discuss how the ‘Common Language Runtime’ (CLR) is composed. It’s made up of several different components including the VM/EE, JIT, GC and others. The treemap below shows the different areas of the source code, grouped by colour into the top-level sections they fall under. You can clearly see that the VM and JIT dominate, as well as ‘mscorlib’, which is the only component written in C#.


Note: This treemap is from my previous post ‘Hitchhikers-Guide-to-the-CoreCLR-Source-Code’ which was written over a year ago, so the exact numbers will have changed in the meantime.

You can also see these ‘components’ or ‘areas’ reflected in the classification scheme used for the CoreCLR GitHub issues (one difference is that area-CodeGen is used instead of JIT).

The CLR and the JIT Compiler

Onto the main subject, just how do the CLR and the JIT compiler work together to transform a method from IL to machine code? As always, the ‘Book of the Runtime’ is a good place to start, from the ‘Execution Environment and External Interface’ section of the RyuJIT Overview:

RyuJIT provides the just in time compilation service for the .NET runtime. The runtime itself is variously called the EE (execution engine), the VM (virtual machine) or simply the CLR (common language runtime). Depending upon the configuration, the EE and JIT may reside in the same or different executable files. RyuJIT implements the JIT side of the JIT/EE interfaces:

- ICorJitCompiler – this is the interface that the JIT compiler implements. This interface is defined in src/inc/corjit.h and its implementation is in src/jit/ee_il_dll.cpp. The following are the key methods on this interface:

  - compileMethod is the main entry point for the JIT. The EE passes it a ICorJitInfo object, and the “info” containing the IL, the method header, and various other useful tidbits. It returns a pointer to the code, its size, and additional GC, EH and (optionally) debug info.

  - getVersionIdentifier is the mechanism by which the JIT/EE interface is versioned. There is a single GUID (manually generated) which the JIT and EE must agree on.

  - getMaxIntrinsicSIMDVectorLength communicates to the EE the largest SIMD vector length that the JIT can support.

- ICorJitInfo – this is the interface that the EE implements. It has many methods defined on it that allow the JIT to look up metadata tokens, traverse type signatures, compute field and vtable offsets, find method entry points, construct string literals, etc. This bulk of this interface is inherited from ICorDynamicInfo which is defined in src/inc/corinfo.h. The implementation is defined in src/vm/jitinterface.cpp.

So there are 2 main interfaces. First there is ICorJitCompiler, which is implemented by the JIT compiler and allows the EE to control how a method is compiled. Second there is ICorJitInfo, which the EE implements to allow the JIT to request information it needs during compilation.
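To make the two directions concrete, here is a minimal sketch of the pattern. Everything in it is made up for illustration: `JitInfoSketch`, `JitCompilerSketch`, `ToyEE` and `ToyJit` are hypothetical stand-ins, not the real CoreCLR types, and the "machine code" is just placeholder bytes. The only point is that the EE calls into the JIT once, and the JIT then calls back into the EE while it works.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for ICorJitInfo: implemented by the EE, called by the JIT.
struct JitInfoSketch {
    virtual ~JitInfoSketch() = default;
    virtual std::string getMethodName(int methodHandle) = 0;
    virtual unsigned getClassSize(int classHandle) = 0;
};

// Hypothetical stand-in for ICorJitCompiler: implemented by the JIT, called by the EE.
struct JitCompilerSketch {
    virtual ~JitCompilerSketch() = default;
    virtual bool compileMethod(JitInfoSketch* ee, int methodHandle,
                               std::vector<std::uint8_t>* nativeCode) = 0;
};

// A toy EE that counts how often the JIT calls back into it.
struct ToyEE : JitInfoSketch {
    int callbacks = 0;
    std::string getMethodName(int methodHandle) override {
        ++callbacks;
        return "Method" + std::to_string(methodHandle);
    }
    unsigned getClassSize(int /*classHandle*/) override {
        ++callbacks;
        return 16;  // made-up size, purely illustrative
    }
};

// A toy JIT that has to query the EE before it can emit anything.
struct ToyJit : JitCompilerSketch {
    bool compileMethod(JitInfoSketch* ee, int methodHandle,
                       std::vector<std::uint8_t>* nativeCode) override {
        assert(ee != nullptr && nativeCode != nullptr);
        std::string name = ee->getMethodName(methodHandle);    // JIT -> EE
        unsigned size = ee->getClassSize(/*classHandle*/ 1);   // JIT -> EE
        // Fake "code": one placeholder byte per character of the name,
        // followed by a single byte holding the class size.
        nativeCode->assign(name.size(), 0x90);
        nativeCode->push_back(static_cast<std::uint8_t>(size));
        return true;
    }
};
```

A caller playing the role of the EE would create a `ToyEE` and a `ToyJit`, pass the former to `compileMethod`, and afterwards find that `callbacks` is 2, i.e. a single compile request triggered two questions going the other way.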

Let’s now look at these interfaces in more detail.

EE ➜ JIT ICorJitCompiler

Firstly, we’ll examine ICorJitCompiler, the interface exposed by the JIT. It’s actually pretty straight-forward and only contains 7 methods:

- CorJitResult __stdcall compileMethod (..)

- void clearCache()

- BOOL isCacheCleanupRequired()

- void ProcessShutdownWork(ICorStaticInfo* info)

- void getVersionIdentifier(..)

- unsigned getMaxIntrinsicSIMDVectorLength(..)

- void setRealJit(..)

Of these, the most interesting one is compileMethod(..), which has the following signature:

    virtual CorJitResult __stdcall compileMethod (
        ICorJitInfo *comp, /* IN */
        struct CORINFO_METHOD_INFO *info, /* IN */
        unsigned /* code:CorJitFlag */ flags, /* IN */
        BYTE **nativeEntry, /* OUT */
        ULONG *nativeSizeOfCode /* OUT */
    ) = 0;

The EE provides the JIT with information about the method it wants compiled (CORINFO_METHOD_INFO) as well as flags (CorJitFlag) which control the:

- Level of optimisation

- Whether the code is compiled in Debug or Release mode

- If the code needs to be ‘Profilable’ or support ‘Edit-and-Continue’

- Alignment of loops, i.e. should they be aligned on byte-boundaries

- If SSE3/SSE4 should be used

- and many other scenarios

The first parameter (comp) is a pointer to the ICorJitInfo interface, which is covered in the next section.
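Since the flags arrive as a plain unsigned value, the JIT presumably tests individual bits to decide how to compile. The sketch below shows that idea only. The flag names and bit positions are invented (the real CorJitFlag values live in the CoreCLR headers), and the rule that debug code disables optimisation is my assumption, not something taken from the source.

```cpp
#include <cstdint>

// Hypothetical flag bits, NOT the real CorJitFlag values.
enum SketchJitFlag : std::uint32_t {
    SKETCH_FLAG_SPEED_OPT   = 1u << 0,  // favour faster code
    SKETCH_FLAG_DEBUG_CODE  = 1u << 1,  // emit debuggable code
    SKETCH_FLAG_PROFILABLE  = 1u << 2,  // leave room for profiler hooks
    SKETCH_FLAG_ALIGN_LOOPS = 1u << 3,  // align loop heads
};

// True when the given bit is set in the combined flags value.
inline bool hasFlag(std::uint32_t flags, SketchJitFlag f) {
    return (flags & f) != 0;
}

// Assumed policy for this sketch: optimise only when speed is requested
// and debuggable code is not.
inline bool shouldOptimise(std::uint32_t flags) {
    return hasFlag(flags, SKETCH_FLAG_SPEED_OPT) &&
           !hasFlag(flags, SKETCH_FLAG_DEBUG_CODE);
}
```

The EE side would combine the bits with `|` before the call, for example `SKETCH_FLAG_SPEED_OPT | SKETCH_FLAG_ALIGN_LOOPS`, and the JIT side would only ever read them.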

JIT ➜ EE ICorJitHost and ICorJitInfo

The APIs that the EE has to implement to work with the JIT are not simple: there are almost 180 functions or callbacks!

| Interface | Method Count |
|---|---|
| ICorJitHost | 5 |
| ICorJitInfo | 19 |
| ICorDynamicInfo | 36 |
| ICorStaticInfo | 118 |
| Total | 178 |

Note: The links take you to the function ‘definitions’ for a given interface. Alternatively all the methods are listed together in this gist.

ICorJitHost makes available ‘functionality that would normally be provided by the operating system’, predominantly the ability to allocate the ‘pages’ of memory that the JIT uses during compilation.

ICorJitInfo (class ICorJitInfo : public ICorDynamicInfo) contains more specific memory allocation routines, including ones for the ‘GC Info’ data, a ‘method/funclet’s unwind information’, ‘.rdata and .pdata for a method’ and the ‘exception handler blocks’.

ICorDynamicInfo (class ICorDynamicInfo : public ICorStaticInfo) provides data that can change from ‘invocation to invocation’, i.e. the JIT cannot cache the results of these method calls. It includes functions that provide:

- Thread Local Storage (TLS) index

- Function Entry Point (address)

- EE ‘helper functions’

- Address of a Field

- Constructor for a delegate

- and much more
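The ‘cannot cache’ rule is easier to see with a toy example. In the sketch below `ToyRuntime` and `CachingJitView` are hypothetical names, the class sizes and addresses are invented, and the moving entry point is simulated by bumping a counter. I’m also assuming that answers on the static side are safe to remember, which the interface naming suggests but this post does not verify.

```cpp
#include <cstdint>
#include <unordered_map>

// Hypothetical EE facade with one 'static' and one 'dynamic' query.
struct ToyRuntime {
    int staticCalls = 0;
    int dynamicCalls = 0;
    std::uintptr_t nextEntryPoint = 0x1000;

    // 'Static' info: the same answer every time for a given class.
    unsigned getClassSize(int classHandle) {
        ++staticCalls;
        return 8u * static_cast<unsigned>(classHandle);
    }

    // 'Dynamic' info: may differ from one invocation to the next.
    std::uintptr_t getFunctionEntryPoint(int methodHandle) {
        ++dynamicCalls;
        std::uintptr_t result =
            nextEntryPoint + static_cast<std::uintptr_t>(methodHandle);
        nextEntryPoint += 0x100;  // simulate the address changing
        return result;
    }
};

// JIT-side view: remembers static answers, always re-asks dynamic ones.
struct CachingJitView {
    explicit CachingJitView(ToyRuntime& rt) : runtime(rt) {}

    unsigned classSize(int classHandle) {
        auto it = sizeCache.find(classHandle);
        if (it != sizeCache.end()) return it->second;  // cache hit
        unsigned size = runtime.getClassSize(classHandle);
        sizeCache.emplace(classHandle, size);
        return size;
    }

    std::uintptr_t entryPoint(int methodHandle) {
        return runtime.getFunctionEntryPoint(methodHandle);  // never cached
    }

    ToyRuntime& runtime;
    std::unordered_map<int, unsigned> sizeCache;
};
```

Asking for the same class size twice reaches the runtime once, whereas asking for the same entry point twice reaches it twice and, in this simulation, returns two different addresses.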

Finally, ICorStaticInfo, which is further sub-divided up into more specific interfaces:

| Interface | Method Count |
|---|---|
| ICorMethodInfo | 28 |
| ICorModuleInfo | 9 |
| ICorClassInfo | 49 |
| ICorFieldInfo | 7 |
| ICorDebugInfo | 4 |
| ICorArgInfo | 4 |
| ICorErrorInfo | 7 |
| Diagnostic methods | 6 |
| General methods | 2 |
| Misc methods | 2 |
| Total | 118 |

Because the interface is nicely composed we can easily see what it provides. The bulk of the functions are concerned with information about a module, class, method or field. For instance the JIT can query the class size, GC layout and obtain the address of a field within a class. It can also learn about a method’s signature, find its parent class and get ‘exception handling’ information (the full list of methods is available in this gist).

These interfaces and the methods they contain give a nice insight into what information the JIT requests from the runtime and therefore what knowledge it requires when compiling a single method.
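The layering described above can be sketched as a small inheritance chain. The outer two layers follow the class declarations quoted earlier (ICorJitInfo derives from ICorDynamicInfo, which derives from ICorStaticInfo). How the sub-interfaces are folded into the static layer is my assumption, and every `Mini...` name, method and value below is hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Two narrow, hypothetical sub-interfaces.
struct MiniMethodInfo {
    virtual ~MiniMethodInfo() = default;
    virtual std::string getMethodName(int methodHandle) = 0;
};
struct MiniClassInfo {
    virtual ~MiniClassInfo() = default;
    virtual unsigned getClassSize(int classHandle) = 0;
};

// The 'static' layer is just the sum of the narrow interfaces (assumed).
struct MiniStaticInfo : virtual MiniMethodInfo, virtual MiniClassInfo {};

// Each further layer adds methods on top of the one below.
struct MiniDynamicInfo : MiniStaticInfo {
    virtual std::uintptr_t getFunctionEntryPoint(int methodHandle) = 0;
};
struct MiniJitInfo : MiniDynamicInfo {
    virtual std::size_t reserveBytes(std::size_t bytes) = 0;
};

// One EE-side object implements the whole stack.
struct MiniEE final : MiniJitInfo {
    std::string getMethodName(int h) override { return "M" + std::to_string(h); }
    unsigned getClassSize(int) override { return 24; }  // made-up size
    std::uintptr_t getFunctionEntryPoint(int h) override {
        return 0x4000u + static_cast<std::uintptr_t>(h);
    }
    std::size_t reserveBytes(std::size_t bytes) override {
        allocated += bytes;
        return allocated;  // running total, illustrative only
    }
    std::size_t allocated = 0;
};

// Code that only needs class data can accept the narrowest interface.
inline unsigned paddedSize(MiniClassInfo& info, int classHandle) {
    unsigned size = info.getClassSize(classHandle);
    return (size + 7u) & ~7u;  // round up to a multiple of 8
}
```

The nice property is that the JIT can hold a single `MiniJitInfo` reference and reach every layer through it, while helpers such as `paddedSize` state exactly which slice of the runtime they depend on.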

Now, let’s look at the end-to-end flow of a couple of these methods and see where they are implemented in the CoreCLR source code.

EE ➜ JIT getFunctionEntryPoint(..)

First we’ll look at a method where the EE provides information to the JIT:

- /src/inc/corinfo.h (shared definition)

- /src/jit/lower.cpp (method call from the JIT)

- /src/vm/jitinterface.h (VM definition)

- /src/vm/jitinterface.cpp (implementation in the VM)

- /src/zap/zapinfo.cpp (ZAP/NGEN implementation)

- /src/jit/ICorJitInfo_API_wrapper.hpp (wrapper)

- /src/ToolBox/superpmi/superpmi/icorjitinfo.cpp (SuperPMI implementation)

JIT ➜ EE reportInliningDecision()

Next we’ll look at a scenario where the data flows from the JIT back to the EE:

- /src/inc/corinfo.h (shared definition)

- /src/jit/inline.cpp (method call from the JIT)

- /src/vm/jitinterface.h (VM definition)

- /src/vm/jitinterface.cpp (implementation in the VM)

- /src/zap/zapinfo.cpp (ZAP/NGEN implementation)

- /src/jit/ICorJitInfo_API_wrapper.hpp (wrapper)

- /src/ToolBox/superpmi/superpmi/icorjitinfo.cpp (SuperPMI implementation)

SuperPMI tool

Finally, I just want to cover the ‘SuperPMI’ tool that showed up in the previous 2 scenarios. What is this tool and what does it do? From the CoreCLR glossary:

SuperPMI - JIT component test framework (super fast JIT testing - it mocks/replays EE in EE-JIT interface)

So in a nutshell it allows JIT development and testing to be de-coupled from the EE, which is useful because we’ve just seen that the 2 components are tightly integrated.

But how does it work? From the README:

SuperPMI works in two phases: collection and playback. In the collection phase, the system is configured to collect SuperPMI data. Then, run any set of .NET managed programs. When these managed programs invoke the JIT compiler, SuperPMI gathers and captures all information passed between the JIT and its .NET host. In the playback phase, SuperPMI loads the JIT directly, and causes it to compile all the functions that it previously compiled, but using the collected data to provide answers to various questions that the JIT needs to ask. The .NET execution engine (EE) is not invoked at all.

This explains why there is a SuperPMI implementation for every method that is part of the JIT <-> EE interface. SuperPMI needs to ‘record’ or ‘collect’ each interaction with the EE and store the information so that it can be ‘played back’ at a later time, when the EE isn’t present.
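The collect/playback idea can be illustrated with a tiny record-and-replay wrapper. This is not how SuperPMI is actually implemented; `EEQueries`, `LiveEE`, `Recorder`, `Replayer` and `compileWith` are all hypothetical, and the interface is shrunk to a single made-up query to keep the sketch short.

```cpp
#include <map>
#include <stdexcept>
#include <utility>

// Hypothetical one-method slice of the JIT -> EE interface.
struct EEQueries {
    virtual ~EEQueries() = default;
    virtual unsigned getClassSize(int classHandle) = 0;
};

// Stands in for the real runtime during the collection phase.
struct LiveEE : EEQueries {
    unsigned getClassSize(int h) override { return 8u * static_cast<unsigned>(h); }
};

// Collection: forward each question to the real EE and remember the answer.
struct Recorder : EEQueries {
    explicit Recorder(EEQueries& real) : real_(real) {}
    unsigned getClassSize(int h) override {
        unsigned answer = real_.getClassSize(h);
        log[h] = answer;
        return answer;
    }
    std::map<int, unsigned> log;
private:
    EEQueries& real_;
};

// Playback: answer purely from the recording, no EE involved.
struct Replayer : EEQueries {
    explicit Replayer(std::map<int, unsigned> recorded) : log_(std::move(recorded)) {}
    unsigned getClassSize(int h) override {
        auto it = log_.find(h);
        if (it == log_.end()) throw std::runtime_error("no recorded answer for this query");
        return it->second;
    }
private:
    std::map<int, unsigned> log_;
};

// Toy 'JIT': its output depends only on what the EE tells it.
inline unsigned compileWith(EEQueries& ee) {
    return ee.getClassSize(2) + ee.getClassSize(5);
}
```

Running `compileWith` against a `Recorder` wrapped around `LiveEE`, then again against a `Replayer` built from the recorder’s log, gives the same result both times. It also shows why every interface method needs its own recording support: a question that was never recorded cannot be answered during playback.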


Further Reading

As always, if you’ve read this far, here’s some further information that you might find useful:

- Mono EE <-> JIT Interface

- CoreRT implementation of the JIT/EE Interface (in C#)

- /src/Native/jitinterface/jitinterface.h (auto-generated, how?)

- /src/JitInterface/src/CorInfoImpl.cs (partial class, the other part is in CorInfoBase.cs)

- /src/JitInterface/src/CorInfoBase.cs (auto-generated by ThunkGenerator, using jitinterface.h)

- /src/JitInterface/src/ThunkGenerator

- /src/JitInterface/src/ThunkGenerator/ThunkInput.txt